Cherish Your Bugs

I’m creating a new system (this blog) focused on the study of systems: systemology. Let’s start a dialogue that cherishes bugs rather than ignoring them.

System failures occur in every industry, and systems fail in similar ways despite their practical differences. From a first-principles perspective, human systems are no different from software systems, which are no different from biological systems, because they all share the same underlying truths: the laws of nature.

In the software industry, failures happen all the time. At Facebook they are called SEVs, and across the industry they are measured in downtime. After any reported SEV or outage, every software company I know of follows a rigorous methodology to detect and triage the issue and to explain what the team will do to mitigate it in the future. Bugs can still get through despite type-checkers, unit and integration tests, and compilers (all tools software engineers use to prevent problems). These tools run in a matter of seconds and provide quick feedback loops; the feedback loops in the rest of the world are much slower to surface.

Without open dialogue about harder-to-test systems, the people in those systems are left hopeless. Just because a system works as expected today doesn’t mean it won’t exhibit unexpected behavior tomorrow (see Black Swan). Positive feedback loops are the most dangerous because they cover up blind spots within the system until those blind spots are exposed. A system that ignores feedback has already begun the process of terminal instability.

Feedback and dialogue about system failures are the goal of this blog. I can guarantee that studying systems won’t erase future failures. Systems exhibit unexpected behavior; this is their default mode. When systems fail, the system participates in creating the future, not the humans who created it. But to succeed, we need to know how to avoid the most likely ways to fail.

What to Expect

In each case study, I’ll explore a past or current failure and what we should take away from it. The case studies will primarily follow this form: identifying the failure, how it was discovered, how the issue was resolved (if it was fixed), and the takeaways we should draw from this particular system’s failure so that we don’t repeat its mistakes. These case studies take significant research to produce, which is why I’ll aim to post them at most twice a month.

In addition, every Sunday you can expect a list of the articles, books, podcasts, and more that I’ve consumed during the week to study systems thinking further. I’m excited to take you along on this journey with me.

Please continue reading to see an example case study.

United Airlines Flight 811

On February 24, 1989, the pilots and flight attendants of a United Airlines Boeing 747 were preparing for a routine flight from Honolulu to Auckland, New Zealand. The crew was seasoned. Captain David Cronin had logged 28,000 flight hours, First Officer Gregory Slader had logged 14,500 flight hours, and Flight Engineer Randal Thomas had logged 20,000 hours. Additionally, the aircraft had accumulated 58,814 flight hours and 15,028 pressurization cycles and had not been involved in any previous accident. The crew and the plane were thought to be more than capable of the journey.

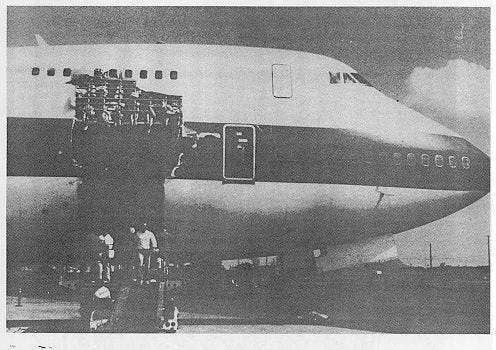

The plane took off from Honolulu. It climbed past 22,000 feet, with the cabin pressurized to maintain oxygen levels for the passengers. The aircraft had been flying for 17 minutes when the flight crew heard a loud “thump.” An electrical short had caused the forward cargo door to become unlatched. At altitudes above 10,000 feet, the pressure differential between the air inside the cabin and the air outside is severe. If the cargo door opened enough to begin leaking air, the pressure difference would cause an explosive decompression, like opening a shaken soda bottle. That’s precisely what happened. The blast blew out the cargo door and ten seats, creating a 10-by-15-foot hole in the side of the airplane.

The crew had no time to prevent the catastrophe. The cargo door blew off, and passengers were ejected within 1.5 seconds. The breach in the fuselage had stabilized, but now the crew faced secondary problems. Below-freezing air was rushing into the cabin. And with the cabin depressurized above 22,000 feet, the passengers were left with air comparable to that near the summit of Mount Everest. Sensors in the plane detect low cabin pressure and release the oxygen masks automatically, but the oxygen supply is only expected to last ten minutes. Moreover, the oxygen system might not work at all, which is precisely what happened.

The blast tore out the onboard emergency oxygen supply system. Debris also damaged the number 3 and 4 engines, the right wing’s leading edge, and the vertical and horizontal stabilizers. The crew began dumping fuel to reduce the aircraft’s landing weight. By sheer luck, the crew kept enough control of the plane to make an emergency descent to an altitude where the air was breathable while also performing a 180-degree turn back to Honolulu.

The Investigation

The National Transportation Safety Board (NTSB) immediately launched an investigation. It found that the aircraft had experienced intermittent malfunctions of the forward cargo door in the months before the accident. Based on these findings, the NTSB faulted the airline for improper maintenance and inspection, citing its failure to identify the damaged locking mechanism. This was a helpful insight, but the NTSB reached its conclusion without all of the data: critical pieces of evidence were still missing. A full six months after the NTSB released its report, the airplane’s cargo door was recovered from the bottom of the Pacific Ocean.

In addition to the NTSB investigation, civilians launched their own investigations based on public information, and their work brought additional theories about the aircraft’s design to light. At the time, Boeing 747 cargo doors were designed to hinge outward, unlike a plug door, which opens inward and closes against its frame. With a plug door, the frame itself acts as a locking mechanism, so the door can’t swing open to the outside; an outward-opening door depends entirely on its latches to stay shut. Boeing had known about these deficiencies since the 1970s, but the problems were never fully addressed and went largely ignored.

The NTSB later issued a revised report that overrode its initial finding blaming the airline. It also recommended that all 747s in service at the time replace their cargo door latching mechanisms with redesigned locks. Additionally, outward-opening doors on all aircraft were to be replaced with inward-opening doors. Since then, no similar accidents leading to loss of life have officially occurred.

Learn

A LARGE SYSTEM PRODUCED BY EXPANDING THE DIMENSIONS OF A SMALLER SYSTEM DOES NOT BEHAVE LIKE THE SMALLER SYSTEM.

The large system in the Flight 811 case is the electrical system that extends the mechanical system operating the cargo door hatch. Electrical systems are excellent but failure-prone when they interact with the outside world. In a closed electrical system, a switch is either on or off. But what happens when the electrical switch is responsible for a mechanical latch that doesn’t close all the way when directed? Especially in the Machine Age, we believe that new systems will act like machines: that the mechanical latch will close when we send an electrical signal to close it. But herein lies the fallacy. A MACHINE ACTS LIKE A SYSTEM. We have our metaphors backward.

The takeaway here is that any system we attach to an underlying system won’t perform the same as the underlying system. We’ve added an entirely new system, or abstraction, on top of the underlying one. But the underlying system is still there. We now have all the problems of the underlying system plus the problems of the new system we’ve attached. This doesn’t mean we shouldn’t add new systems to old ones, but we need to be aware of what we’re doing when we do. Potential problems increase exponentially; they don’t decrease.
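To make the “exponentially” claim concrete, here is a toy sketch in Python. It assumes each component is simply working or failed (a deliberate simplification; a latch that doesn’t close all the way is neither), and the component names are hypothetical labels, not the actual 747 door parts list.

```python
from itertools import product


def joint_states(components):
    """Every combination of working (True) / failed (False) for the components."""
    return list(product((True, False), repeat=len(components)))


def failure_states(components):
    """Joint states in which at least one component has failed."""
    return [state for state in joint_states(components) if not all(state)]


def pairwise_interactions(components):
    """Number of distinct component pairs that could interact with each other."""
    n = len(components)
    return n * (n - 1) // 2


# Hypothetical component names, for illustration only.
mechanical = ["latch cams", "locking sectors", "handle"]
electrical = ["actuator motors", "handle switch", "wiring"]
combined = mechanical + electrical

for label, parts in [("mechanical", mechanical), ("combined", combined)]:
    print(label, len(joint_states(parts)), len(failure_states(parts)),
          pairwise_interactions(parts))
# mechanical 8 7 3
# combined 64 63 15
```

Attaching three electrical parts to three mechanical parts doesn’t add three new ways to fail: under these assumptions the state space grows from 8 combinations to 64, and the pairs of parts that can interact grow from 3 to 15.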

WHEN A FAIL-SAFE SYSTEM FAILS, IT FAILS BY FAILING TO FAIL SAFE.

Despite finding that the aircraft had experienced intermittent failures of the cargo door, both the NTSB and Boeing maintained that the door was fail-safe and that the airline and ground crews had damaged the mechanism by using it improperly and not servicing it when they noticed it malfunctioning. After all, Boeing and the NTSB argued, the switch was designed to deactivate the door motors once the handle was locked, so nothing should have happened. Based on this damaged-door hypothesis, the NTSB concluded that the accident was preventable human error and not a problem inherent in the design of the cargo door. Because the system was considered fail-safe, there was no backup mechanism in case it failed; it was believed that it would never fail. The NTSB and Boeing were left pointing fingers at other probable causes instead of owning up to the flaws in the supposedly fail-safe system. The critical assumption here is that fail-safe systems exist. Repeat after me: NO SYSTEM IS FAIL-SAFE.
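A toy model in Python can show how a fail-safe interlock fails by failing to fail safe. This is a hypothetical simplification, not the actual 747 door circuitry: it assumes, as Boeing and the NTSB argued, that the handle switch cuts power to the door motors once the handle is locked, and it adds one failure mode the interlock logic never considers, a short that energizes the motor anyway.

```python
from dataclasses import dataclass


@dataclass
class CargoDoor:
    """Hypothetical model of an electrically driven latch with a handle interlock."""
    handle_locked: bool = True
    wiring_short: bool = False  # failure mode outside the interlock's design model

    def interlock_allows_motor(self) -> bool:
        # Design intent: a locked handle cuts power to the door motors.
        return not self.handle_locked

    def motor_energized(self) -> bool:
        # A short delivers power past the switch, bypassing the interlock entirely.
        return self.interlock_allows_motor() or self.wiring_short

    def latches_secure(self) -> bool:
        # In this toy model, an energized motor drives the latches toward open.
        return not self.motor_energized()


normal = CargoDoor()
faulted = CargoDoor(wiring_short=True)
print("normal:", normal.interlock_allows_motor(), normal.latches_secure())
print("faulted:", faulted.interlock_allows_motor(), faulted.latches_secure())
# normal: False True
# faulted: False False
```

The interlock’s own check reports the motor as disabled in both cases; the failure lives in a path the check was never designed to see, and with no backup mechanism there is nothing left to catch it.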

-KYLE

Hi Kyle- Great first post, I’m looking forward to more! It just so happens you hit on an example very close to my field of work (I am a mechanical engineer in aviation). I wasn’t familiar with Flight 811, so thank you for sharing. It made me think of Failure Mode and Effects Analysis (FMEA), an approach we use to mitigate (though to your point, not absolutely prevent!) the most unacceptable failures. In this case, the door designers would have to brainstorm all the ways the door could fail, along with the possible causes and effects. Of course, this process is only as good as the expertise of the people executing it. If no one invites the electrical engineer, you will miss some major failure modes. And even if you do include them, people often can’t conceive of all the ways things can go wrong until they tragically do. And to your point, complex systems that are components within even larger, more complex systems will ALWAYS have interactions you’re not even aware of.

An FMEA example for reference: https://www.isixsigma.com/wp-content/uploads/2013/05/FMEA-Round-1.gif

Thanks again!